How Can Collaborative Tools Improve Online Learning with VR Video?

By Georgie Qiao Jin

Virtual Reality (VR) has long been touted as a revolutionary technology, offering a unique and immersive learning experience that can transport students to far-flung locations and bring abstract concepts to life. However, one of the biggest challenges for VR adoption has been the high cost of creating VR content. Instructors have to seek help from VR developers or 3D model designers, because it is hard to find or create content that fits their classes.

With the proliferation of inexpensive panoramic consumer video cameras and various types of video editing software, 360-degree video in VR has attracted growing attention as an alternative way for instructors to build a realistic and immersive environment. It is a “more user-friendly, realistic and affordable” way to create a digital experience than developing a simulated VR environment.

Pedagogically, collaborative learning is better than individual learning in many scenarios. This points to a research gap: collaborative VR video learning environments have seen little development or empirical investigation.

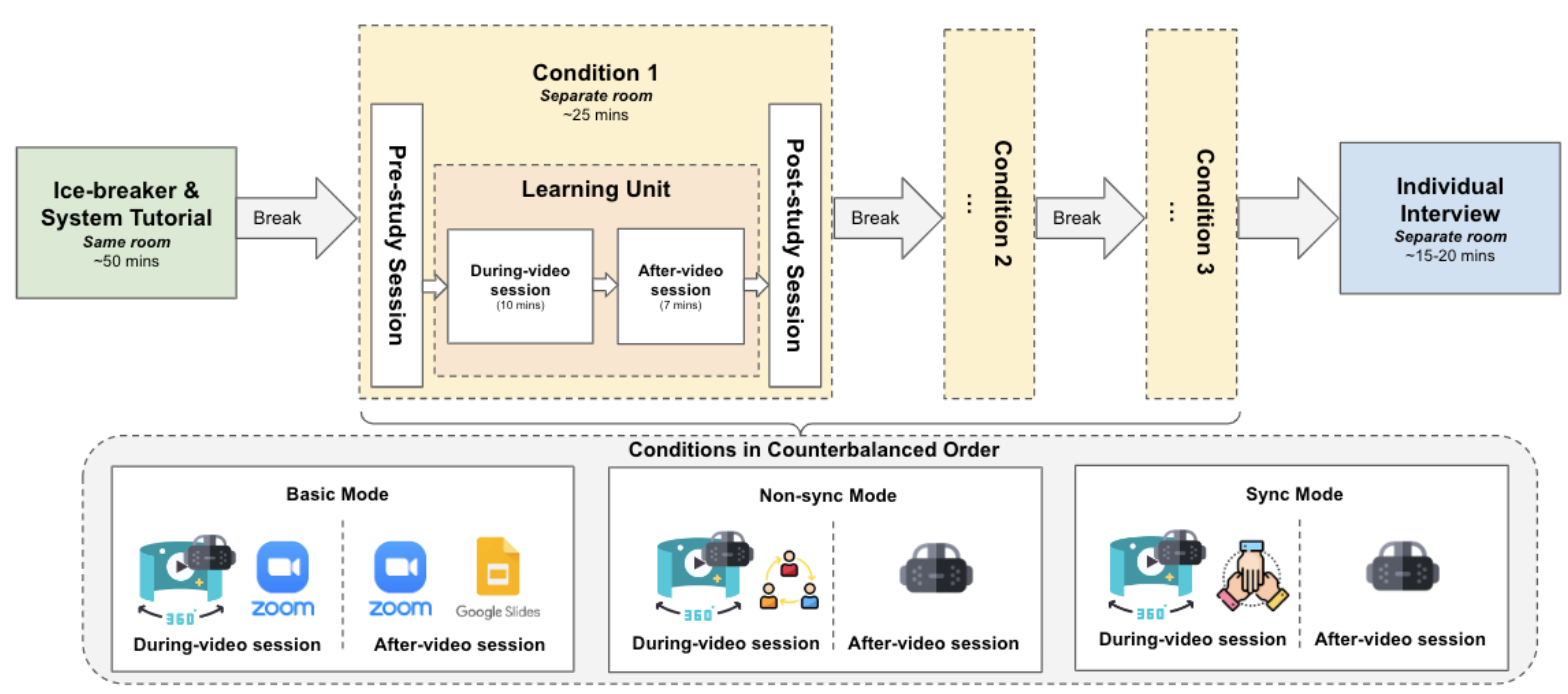

We designed two collaborative modes (Non-sync and Sync) to investigate the roles of collaborative tools and shared video control, and compared them with a baseline built on a plain video player, called Basic mode (see our demo in the following video). Each mode contains a video viewing system and an after-video platform for further discussion and collaboration. Basic mode uses a conventional VR video viewing system together with an existing, widely available online platform. Non-sync mode includes a collaborative video viewing system in which each student has individual control of the video and its timeline, plus an in-VR platform for after-video discussion. Sync mode contains the same in-VR after-video platform, but students share video control.
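The difference between the two collaborative modes comes down to who owns the playback state. The following is a minimal sketch of that idea, not the system used in the study: the names `PlaybackState` and `VideoSession` are hypothetical, and the model is in-memory only, with no networking or VR rendering.

```python
from dataclasses import dataclass, field


@dataclass
class PlaybackState:
    """Position on the video timeline and whether the video is playing."""
    position_s: float = 0.0
    playing: bool = False


@dataclass
class VideoSession:
    """Hypothetical model of one group's viewing session.

    shared_control=True  -> Sync mode: one playback state for the whole group.
    shared_control=False -> Non-sync mode: one playback state per viewer.
    """
    viewers: list
    shared_control: bool
    _states: dict = field(default_factory=dict, init=False)

    def __post_init__(self):
        if self.shared_control:
            # Every viewer points at the same state object.
            shared = PlaybackState()
            self._states = {v: shared for v in self.viewers}
        else:
            # Each viewer gets an independent state object.
            self._states = {v: PlaybackState() for v in self.viewers}

    def seek(self, viewer, position_s):
        """Move the timeline on behalf of one viewer."""
        self._states[viewer].position_s = position_s

    def set_playing(self, viewer, playing):
        """Play or pause on behalf of one viewer."""
        self._states[viewer].playing = playing

    def state_of(self, viewer):
        return self._states[viewer]


sync = VideoSession(["ana", "ben", "chen"], shared_control=True)
sync.seek("ana", 42.0)          # in Sync mode, everyone jumps to 42 s

non_sync = VideoSession(["ana", "ben", "chen"], shared_control=False)
non_sync.seek("ana", 42.0)      # in Non-sync mode, only ana moves
```

In this sketch, shared control means one student's seek or pause affects the whole trio, while individual control lets each student move at their own pace.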

The study aimed to answer two research questions:

RQ1: How does VR video delivery via existing technology (Basic mode) compare to collaborative VR video delivery (Sync and Non-sync modes) on measures of knowledge acquisition, collaboration, social presence, cognitive load, and satisfaction?

RQ2: How does individual VR video control (Non-sync mode) compare with shared video control (Sync mode) on measures of knowledge acquisition, collaboration, social presence, cognitive load, and satisfaction?

To examine how different types of collaborative technology influence the perceptions and experiences of online learning, we conducted a three-condition within-subject experiment with 54 participants in 18 groups of three. We collected quantitative data from knowledge assessments, self-reported questionnaires, and log files, then triangulated the validated measures with qualitative data from semi-structured interviews.

For RQ1, we found that both collaborative VR-based systems achieved statistically significantly higher scores than the baseline system on measures of visual knowledge acquisition, collaboration, social presence, and satisfaction. In the qualitative results, participants pointed to potential reasons for Basic mode's lower scores on collaboration and satisfaction, such as the lack of shared context and current technical obstacles (e.g., echoes). They also appreciated the in-VR platform’s ability to transmit and display visuals during after-video discussion, which may explain Basic mode's lower scores on visual knowledge acquisition.

For RQ2, the shared control in Sync mode significantly increased the ease of collaboration and the sense of social presence. In particular, shared control significantly increased view similarity (how often the team was watching the same view of the video) and discussion time during the video. According to the qualitative results, shared control in Sync mode led to better collaboration experiences because communication was more comfortable. There was, however, a tension between communication comfort and learning pace flexibility, and the control method influenced the perceived usefulness of the collaborative tools.
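This post does not spell out how view similarity was computed, so the following is only one plausible sketch of such a measure, not the paper's definition. It assumes a hypothetical log format in which each time step records a `(yaw, pitch)` head orientation in degrees for every group member, and it counts a time step as "same view" when every pair of viewers looks within an assumed 30-degree threshold of each other.

```python
import math
from itertools import combinations


def direction(yaw_deg, pitch_deg):
    """Convert a yaw/pitch orientation in degrees to a unit vector."""
    yaw, pitch = math.radians(yaw_deg), math.radians(pitch_deg)
    return (math.cos(pitch) * math.cos(yaw),
            math.cos(pitch) * math.sin(yaw),
            math.sin(pitch))


def angle_between(a, b):
    """Angle in degrees between two unit vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    dot = max(-1.0, min(1.0, dot))  # guard against rounding outside [-1, 1]
    return math.degrees(math.acos(dot))


def view_similarity(samples, threshold_deg=30.0):
    """Fraction of time steps in which all viewers look in a similar direction.

    samples: list of time steps; each time step is a list of (yaw, pitch)
             tuples, one per viewer (assumed log format).
    threshold_deg: assumed cutoff for "same view"; not taken from the paper.
    """
    if not samples:
        return 0.0
    same = 0
    for orientations in samples:
        dirs = [direction(yaw, pitch) for yaw, pitch in orientations]
        if all(angle_between(a, b) <= threshold_deg
               for a, b in combinations(dirs, 2)):
            same += 1
    return same / len(samples)


log = [
    [(0, 0), (10, 0), (5, 5)],    # all three look roughly the same way
    [(0, 0), (180, 0), (0, 0)],   # one viewer faces the opposite direction
]
similarity = view_similarity(log)
```

The sketch compares orientation only. With individual control, viewers may also be at different points on the timeline, so a fuller measure would likely need to require that playback positions match within some tolerance as well.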

Our work provides implications for design and research on collaborative VR video viewing. One important implication is the need to balance the trade-off between learning pace flexibility and communication comfort based on teaching needs. Expectations for time flexibility and collaboration experience may differ across educational activities and learning scenarios. Designers of collaborative VR applications should therefore decide whether to use shared control based on the specific purpose.

Finally, takeaways from this paper:

- Collaborative VR video viewing systems can improve visual knowledge acquisition, collaboration, social presence, and satisfaction compared to a conventional system.

- Shared video control in VR video viewing can enhance collaboration experiences by increasing communication comfort, but may also reduce learning pace flexibility.

- In-VR platforms for after-video discussion can enhance visual transmission and engagement, and improve the overall learning experience in collaborative VR video environments.

Find more information in our paper here, coming to CHI 2023!

Cite this paper:

Qiao Jin*, Yu Liu*, Ruixuan Sun, Chen Chen, Puqi Zhou, Bo Han, Feng Qian, and Svetlana Yarosh. 2023. Collaborative Online Learning with VR Video: Roles of Collaborative Tools and Shared Video Control. In Proceedings of the 2023 CHI Conference on Human Factors in Computing Systems (CHI ’23). https://doi.org/10.1145/3544548.3581395