It seems like every day there is a new gig work platform (e.g. UpWork, Uber, Airbnb, or Rover) that uses a 5-star scale to rate workers. This helps workers build reputation and develop the trust necessary for gig work interactions, but there is a big concern: lots of prior work finds that race and gender biases occur when people evaluate each other. In an upcoming paper at the 2018 ACM CSCW conference, we describe what we thought would be a straightforward study of race and gender biases in 5-star reputation systems. However, it turned into an exercise in repeated experimentation to verify surprising results and careful statistical analysis to better understand our findings. Ultimately, we ended up with a future research agenda composed of compelling new hypotheses about race, gender and five-star rating scales.

We expected that race and gender biases would occur in gig work reputation systems, so we set out to study this issue and figure out how to address it. We planned to write our paper – and this blog post – about approaches to minimize biases in reputation systems. We were so sure these biases would occur that one author suggested we maybe didn’t even need to measure them (luckily, we did).

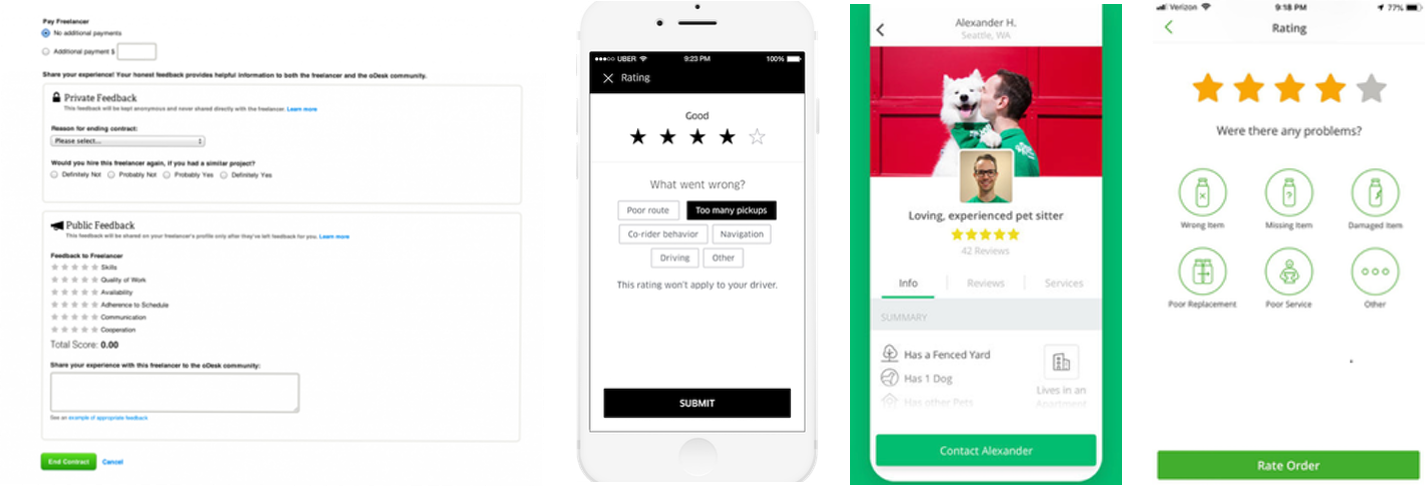

As academic researchers, we can’t experiment in live gig work systems like companies such as UpWork and Uber can. Instead, we designed an experimental system and tested it on Mechanical Turk. We showed participants writing critiques (in analogy to ‘editing’ tasks on UpWork), and asked them to evaluate work done by a (simulated) gig worker, where we randomized the (simulated) race and gender of the worker.

Except: our experiment identified no significant bias. Now, your first reaction is probably “They did something wrong”; ours certainly was. Of course, this may be the case (see below for limitations), but we found that participants reliably distinguished low quality (simulated) work from high quality work, suggesting our experimental design functioned as designed. These findings confused us. Were they a fluke? If we ran the study again, would they persist?

To establish the reliability of our findings, we ran three more experiments, each changing a different aspect of our study design: (i) increasing ecological validity, (ii) moving to a within-subjects design, and (iii) using a different task entirely. None of our three additional experiments identified significant bias. And yet in all three cases, participants consistently differentiated low and high quality work.

Because so much research from fields like psychology, education, and business has found that gender and race biases occur in this type of context, we wanted to understand how our results happened. We took a methodologically conservative approach to understanding our results, built on three components: replicating the lack of bias (mentioned above), being statistically confident in the lack of bias, and providing carefully considered testable hypotheses to help guide future work.

Just because statistical tests did not detect bias does not mean bias did not exist. For instance, maybe our data exhibited bias, but we just couldn’t measure it? Four replicated results suggested that this was not the case, but we needed to be confident about the lack of bias. To do this, we had to pick an upper bound for potential bias, which we did based on average-rating patterns and deactivation thresholds used in systems like Uber. We used a Bayesian statistical approach to investigate how likely it was that our data actually could reflect a ratings difference between race or gender groups larger than 0.2 stars. We ran this analysis for all four of our experiments, and found that if bias exists in our data, it is unlikely to be larger than 0.2 stars. The probability of bias that large was never higher than 20% and sometimes was as low as 1%.
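To make the idea concrete, here is a minimal sketch of this kind of check. It is not the analysis from the paper: it assumes a flat prior and a normal approximation to the posterior of the difference in mean ratings, and the function name `prob_gap_exceeds` and the ratings below are made up purely for illustration.

```python
from math import sqrt
from statistics import NormalDist, mean, variance


def prob_gap_exceeds(ratings_a, ratings_b, bound=0.2):
    """Approximate posterior probability that |mean_a - mean_b| > bound.

    Assumption (not from the paper): flat prior plus a normal
    approximation, so the posterior of the difference in means is
    centered on the observed difference with the usual standard error.
    """
    diff = mean(ratings_a) - mean(ratings_b)
    se = sqrt(
        variance(ratings_a) / len(ratings_a)
        + variance(ratings_b) / len(ratings_b)
    )
    posterior = NormalDist(mu=diff, sigma=se)
    # Posterior mass outside the interval [-bound, +bound].
    return posterior.cdf(-bound) + (1.0 - posterior.cdf(bound))


# Hypothetical 5-star ratings for two simulated worker groups.
group_a = [5, 4, 4, 5, 3, 4, 5, 4, 4, 5, 3, 4]
group_b = [4, 5, 4, 4, 3, 5, 4, 4, 5, 4, 3, 5]

p = prob_gap_exceeds(group_a, group_b)
print(f"P(|difference| > 0.2 stars) = {p:.2f}")
```

A small probability here would mean that a gap larger than the chosen bound is unlikely given the data, which is a stronger statement than a non-significant test. With only a handful of ratings per group the posterior is wide, so the probability stays fairly large even when the observed means are identical.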

At this point, we were stumped. Even though prior work found bias when people evaluate one another, our four experiments found no bias, and our statistical check showed it is unlikely that our data exhibits bias. So we went back to the metaphorical drawing board to try to understand why our results occurred. After all, we may have uncovered a context in which bias does not occur, and regardless of whether this is the case, our surprising results strongly suggest important future directions of research on ratings bias.

To this end, we consulted with experts from other fields, and returned to the relevant literature. We came up with three categories of hypotheses that might help explain our results and that should be explored. We’re honestly not sure which we believe is most likely!

The gig economy does not show gender or racial biases in ratings.

While this is the simplest explanation, there is reason to question its validity. Recent literature [1] suggests that at least in some cases, bias is observed in gig work systems. We think more work is needed here.

Mechanical Turk workers are WEIRD(ish).

The results of many psychological studies are influenced by the fact that their participants are WEIRD (Western, Educated, Industrialized, Rich, Democratic) – who participants are influences research outcomes. In our context, this might mean two possible things:

- Mechanical Turk workers are not representative of gig economy consumers, even if they are representative of the general population.

- Because Mechanical Turk workers are crowdworkers themselves, they may be the wrong population to experiment with race- or gender-biases in rating interfaces for crowdwork.

Something about our experimental setup prevented bias from showing up.

We have a couple of ideas here:

- First, we used “third-party” evaluations – i.e., our participants evaluated work done for someone else – so maybe the participants were not engaged enough to exhibit bias. This seems intuitive, but prior research (e.g. [2]) with similarly constructed experiments does show bias.

- Second, perhaps the way we selected photos for our simulated crowdworkers limited the appearance of bias, and more natural photos would lead to race- and gender-based biases. We think that understanding the mechanisms of bias is an important direction of future experimentation.

We go into a lot of detail about our methods, our studies, and the rationale for our hypotheses in our paper (pre-print). Please check it out, and join in the conversation.

[1] Hannák, Anikó, Claudia Wagner, David Garcia, Alan Mislove, Markus Strohmaier, and Christo Wilson. 2017. “Bias in Online Freelance Marketplaces: Evidence from TaskRabbit and Fiverr.” http://claudiawagner.info/publications/cscw_bias_olm.pdf.

[2] Bigoness, William J. 1976. “Effect of Applicant’s Sex, Race, and Performance on Employers’ Performance Ratings: Some Additional Findings.” Journal of Applied Psychology 61 (1): 80–84. https://doi.org/10.1037/0021-9010.61.1.80.