The English version of Wikipedia contains over 6.5 million articles… but only 0.09% of them have received Wikipedia’s highest quality rating. In other words, there’s still a lot of work to be done.

But where to start?

A group of highly experienced Wikipedia editors tried to answer that question. Through extensive discussion and consensus-building, they manually compiled lists of Vital Articles (VA) that should be prioritized for improvement. We analyzed their discussions to identify the values they brought to the table when making those decisions. Among other things, we found a desire for Wikipedia to be "balanced", including along gender lines. Wikipedia has long been criticized for its gender imbalance, so this was encouraging!

But how well is this value reflected in the actual prioritization decisions these editors made when developing the Vital Articles lists?

Not so much.

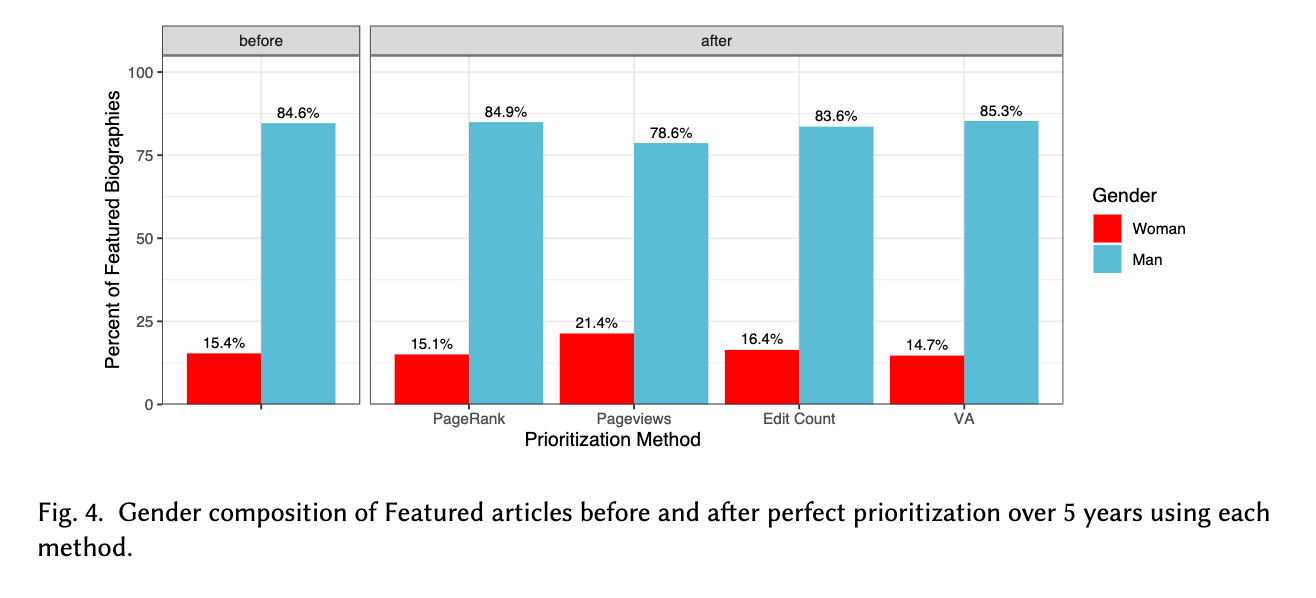

Figure 4 from our paper shows what would happen if editors were to use Vital Articles to prioritize work on biographies: the proportion of highest-quality biographies that are about women would decrease from 15.4% to 14.7%. By contrast, using pageviews (which indicate reader interest) to prioritize work would increase that proportion to 21.4%.

In short, if you want more gender balance, just prioritize what readers happen to read, not what a devoted group of editors painstakingly curated over several years with gender balance as one of their goals. So what gives? Are Wikipedians just pretending to care about gender balance?

Not quite.

As it happens, only 7.5% of VA's participants self-identify as women. For reference, the corresponding figure for English Wikipedia as a whole was 12.9% at the time we collected our data. Prior work offers plenty of evidence to help explain why a heavily male-skewed group of editors might have failed to include enough articles about women despite good intentions. Some of the reasons are quite intuitive, too. As one Wikipedian put it, "On one hand, I'm surprised [the Menstruation article] isn't here, but then as one of the x-deficient 90% of editors, I wouldn't have even thought to add it."

The takeaway: when it comes to prioritizing content, skewed demographics might prevent the Wikipedia editor community from fully enacting its own values. However, this effect does not hold equally for all community values: we find that VA would actually be a great prioritization tool for increasing geographical parity on Wikipedia. As for why? We have some ideas…

But for more on that (and other cool findings from our work), you'll have to check out our research paper on this topic, coming to CSCW 2022! You can find the arXiv preprint here.